Here is the situation: I have a trained ML model, and I want to pass both strings and numbers to it as features. The reason is that, imaginatively speaking, I hope a hotel manager who doesn't know much about tech will be able to use my trained model by selecting their concerns from an HTML page.

Assuming that you have some knowledge of ML modelling, this article promises to help you with the basics of passing strings and numbers to your machine learning model for prediction.

Therefore, in this tutorial we shall look at the following:

1. Building a machine learning model that accepts strings and passes numbers through for predictions.

2. Building an interactive user interface that passes form inputs to the trained model to make predictions.

3. Using Flask to build a REST API to deploy the model locally.

By ensuring these three concerns are met, any authorized user can interact with the model from their local machine or from the cloud.

What will our model do? Our model will predict the type of food that will be ordered (the DV, dependent variable) based on Age, Nationality, Gender, Dessert and Juice (the IVs, independent variables). The model is based on this dataset from Kaggle, which I modified a bit by adding some more classes to the target variable.

Let’s go for a commercial break, please!

The main purpose of this tutorial is to demonstrate how your model can accept string user inputs, transform them, and then make predictions.

Step 1. Building a machine learning model.

There are 5 steps that I feel will be key in this section:

A. We start by reading the CSV file and then plotting the relevant relationships with Matplotlib to help visualize the variables better. This helps determine which features will influence your model's performance with respect to the target variable.
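
A minimal sketch of step A, assuming a CSV with the column names used in this article (Age, Nationality, Gender, Dessert, Juice, Food). The six rows below are made-up stand-ins for the Kaggle file, read through io.StringIO so the snippet runs on its own; with the real file you would pass its path to pd.read_csv instead. The two plots chosen here (a Gender/Food bar chart and Age histograms per Food class) are just examples of "relevant relationships".

```python
import io

import matplotlib

matplotlib.use("Agg")  # non-interactive backend so this runs without a display
import matplotlib.pyplot as plt
import pandas as pd

# Made-up rows standing in for the real Kaggle CSV (same column names as the article).
CSV_TEXT = """Age,Nationality,Gender,Dessert,Juice,Food
24,Indian,Male,Yes,Fresh Juice,Traditional food
30,American,Female,Maybe,Carbonated drinks,Western Food
22,Indian,Female,Yes,Fresh Juice,Traditional food
35,Pakistani,Male,No,Fresh Juice,Western Food
28,American,Male,Yes,Carbonated drinks,Western Food
26,Indian,Female,Maybe,Fresh Juice,Traditional food
"""

df = pd.read_csv(io.StringIO(CSV_TEXT))

# How often each Food class is chosen within each Gender.
counts = pd.crosstab(df["Gender"], df["Food"])

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(10, 4))
counts.plot(kind="bar", ax=ax1, title="Food choice by Gender")

# Overlaid Age histograms, one per Food class (Age is the only continuous feature).
for food, group in df.groupby("Food"):
    ax2.hist(group["Age"], alpha=0.5, label=food)
ax2.set_title("Age distribution by Food")
ax2.set_xlabel("Age")
ax2.legend()
fig.tight_layout()
# Call fig.savefig("relationships.png") or plt.show() to inspect the figure.
```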

In this dataset, Age is the only continuous variable, and the target variable, Food, is categorical. You might notice that the distribution of the observations suggests algorithms such as logistic regression, ensemble techniques, Naïve Bayes and decision trees rather than, say, linear regression. However, it is important to try different modelling algorithms while tuning their parameters, with the aim of getting the best optimized model. I compared models using the R² score; since Food is categorical, accuracy (or F1) is the more conventional metric for comparing classifiers.
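
One way to compare several algorithms, as suggested above, is cross-validated accuracy on top of a single preprocessing pipeline. This is a sketch on randomly generated, made-up data, so the scores themselves mean nothing. The three candidate models, the `candidates` dictionary and the five folds are illustrative choices, and the preprocessing mirrors the imputer plus one-hot setup described in step C.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.ensemble import RandomForestClassifier
from sklearn.impute import SimpleImputer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder
from sklearn.tree import DecisionTreeClassifier

# Random stand-in data with the article's column names (not the real dataset).
rng = np.random.default_rng(0)
n = 120
X = pd.DataFrame({
    "Age": rng.integers(18, 60, n),
    "Nationality": rng.choice(["Indian", "American", "Pakistani"], n),
    "Gender": rng.choice(["Male", "Female"], n),
    "Dessert": rng.choice(["Yes", "No", "Maybe"], n),
    "Juice": rng.choice(["Fresh Juice", "Carbonated drinks"], n),
})
y = rng.choice(["Traditional food", "Western Food"], n)

xcols = ["Nationality", "Gender", "Dessert", "Juice"]
preprocess = ColumnTransformer(
    [("cat", Pipeline([
        ("impute", SimpleImputer(strategy="most_frequent")),
        ("onehot", OneHotEncoder(handle_unknown="ignore")),
    ]), xcols)],
    remainder="passthrough",  # Age goes through unchanged
)

candidates = {
    "logistic_regression": LogisticRegression(max_iter=1000),
    "decision_tree": DecisionTreeClassifier(random_state=0),
    "random_forest": RandomForestClassifier(random_state=0),
}

# Mean 5-fold accuracy per model; cross_val_score clones the pipeline for every fold.
scores = {
    name: cross_val_score(
        Pipeline([("preprocess", preprocess), ("model", model)]),
        X, y, cv=5, scoring="accuracy",
    ).mean()
    for name, model in candidates.items()
}
```

Swapping `scoring="accuracy"` for another metric name, or wrapping each pipeline in a grid search, would extend this to the parameter tuning mentioned above.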

B. Dividing the dataset into training and testing sets and storing the categorical features in a variable for preprocessing.

The focus here, after splitting the dataset into train and test sets, is to store the categorical features in a variable that will be passed to the column transformer for imputing and encoding.

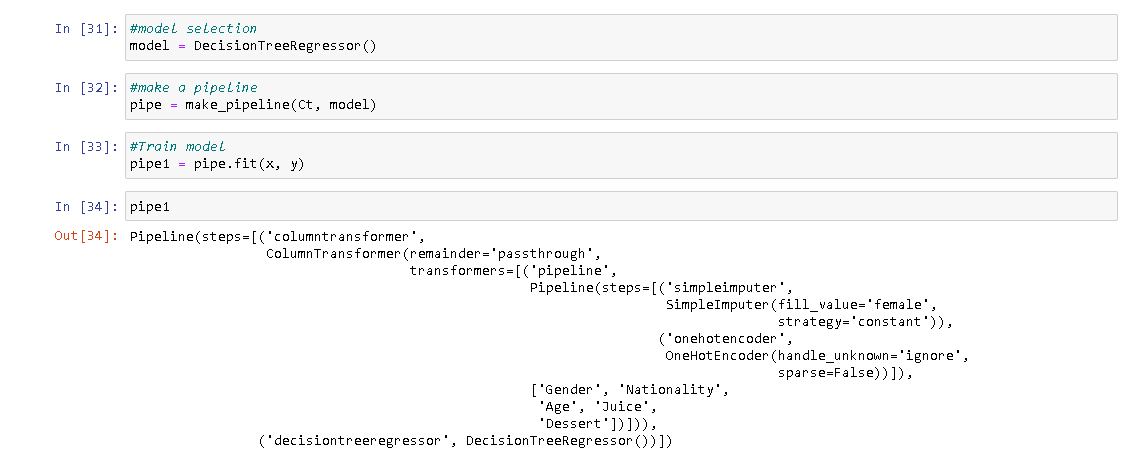
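
A sketch of step B on ten made-up rows with the article's column names. The 80/20 split and the random_state value are assumptions; xcols is listed explicitly here, although it could also be derived from the column types.

```python
import pandas as pd
from sklearn.model_selection import train_test_split

# Made-up rows standing in for the real dataset.
df = pd.DataFrame({
    "Age": [24, 30, 22, 35, 28, 26, 41, 19, 33, 27],
    "Nationality": ["Indian", "American", "Indian", "Pakistani", "American",
                    "Indian", "American", "Pakistani", "Indian", "American"],
    "Gender": ["Male", "Female", "Female", "Male", "Male",
               "Female", "Male", "Female", "Male", "Female"],
    "Dessert": ["Yes", "Maybe", "Yes", "No", "Yes", "Maybe", "No", "Yes", "Maybe", "No"],
    "Juice": ["Fresh Juice", "Carbonated drinks", "Fresh Juice", "Fresh Juice",
              "Carbonated drinks", "Fresh Juice", "Carbonated drinks", "Fresh Juice",
              "Carbonated drinks", "Fresh Juice"],
    "Food": ["Traditional food", "Western Food", "Traditional food", "Western Food",
             "Western Food", "Traditional food", "Western Food", "Traditional food",
             "Western Food", "Traditional food"],
})

# Features (IVs) and target (DV).
X = df.drop(columns="Food")
y = df["Food"]

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

# Categorical feature names, later handed to the column transformer.
xcols = ["Nationality", "Gender", "Dessert", "Juice"]
```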

C. Pipelines and Column Transformers.

We are going to create a pipeline that contains the preprocessing steps and use it within the column transformer to process the categorical columns, which we store in “xcols”. The pipeline imputes missing values and then one-hot encodes them. The imputer's “add_indicator” parameter is set to “False” because we don't need the extra Boolean columns that would mark where values were imputed. The encoder's “handle_unknown” parameter is set to “ignore”, so a category that was not seen during training (for example, a nationality that never appeared in the training data) is encoded as all zeros instead of raising an error. We transform all the categorical columns and pass the “Age” column through unchanged, since it is already a number.
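
The preprocessing described above might look like this in scikit-learn. It is a sketch rather than the author's exact code: the “most_frequent” imputation strategy and the step names (“impute”, “onehot”, “cat”) are assumptions, and the rows, including the deliberately missing nationality and the unseen “Kenyan” value, are made up.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder

xcols = ["Nationality", "Gender", "Dessert", "Juice"]

# Made-up training rows; one Nationality value is missing on purpose.
X_train = pd.DataFrame({
    "Age": [24, 30, 22, 35],
    "Nationality": ["Indian", "American", np.nan, "Indian"],
    "Gender": ["Male", "Female", "Female", "Male"],
    "Dessert": ["Yes", "Maybe", "Yes", "No"],
    "Juice": ["Fresh Juice", "Carbonated drinks", "Fresh Juice", "Fresh Juice"],
})

cat_steps = Pipeline([
    # Fill gaps with the most common category; no extra missing-indicator columns.
    ("impute", SimpleImputer(strategy="most_frequent", add_indicator=False)),
    # Categories unseen during fit become all-zero rows instead of raising an error.
    ("onehot", OneHotEncoder(handle_unknown="ignore")),
])

preprocess = ColumnTransformer(
    [("cat", cat_steps, xcols)],
    remainder="passthrough",  # Age is already numeric, so it is passed through
)

# 9 one-hot columns (2 + 2 + 3 + 2 categories) followed by the Age column.
encoded = preprocess.fit_transform(X_train)

# A nationality that never appeared in training is ignored rather than rejected.
new_row = pd.DataFrame({
    "Age": [29], "Nationality": ["Kenyan"], "Gender": ["Male"],
    "Dessert": ["No"], "Juice": ["Fresh Juice"],
})
encoded_new = preprocess.transform(new_row)
```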

The difference between how pipelines and column transformers preprocess features is that in a column transformer, different features are transformed using different (or the same) preprocessing steps, whereas in a pipeline, multiple transformations are applied one after another to the same column(s).
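
To make the distinction concrete, here is a small sketch with made-up values. Mean imputation and StandardScaler on Age are illustrative choices only (the article itself passes Age through untouched): the Pipeline runs two steps in sequence on the same column, while the ColumnTransformer sends Age and Gender to different transformers side by side.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

df = pd.DataFrame({"Age": [20.0, np.nan, 40.0], "Gender": ["Male", "Female", "Male"]})

# Pipeline: impute, THEN scale, both applied to the same Age column.
age_steps = Pipeline([
    ("impute", SimpleImputer(strategy="mean")),
    ("scale", StandardScaler()),
])
age_only = age_steps.fit_transform(df[["Age"]])  # 20, 30 (imputed), 40, then standardized

# ColumnTransformer: different columns go to different transformers.
ct = ColumnTransformer([
    ("age", age_steps, ["Age"]),
    ("gender", OneHotEncoder(), ["Gender"]),
])
both = ct.fit_transform(df)  # 1 scaled Age column + 2 one-hot Gender columns
```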

Lastly, we transform the target variable, which in this case is “Food”, using the label encoder.
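
A quick illustration of LabelEncoder on a made-up “Food” column. The encoder sorts the class names, so the integer codes follow alphabetical order, and inverse_transform maps codes back to names, which is handy when turning a numeric prediction back into a food label.

```python
from sklearn.preprocessing import LabelEncoder

food = ["Western Food", "Traditional food", "Western Food", "Traditional food"]

le = LabelEncoder()
y_encoded = le.fit_transform(food)  # one integer code per row

print(le.classes_.tolist())  # ['Traditional food', 'Western Food'] (sorted)
print(y_encoded.tolist())    # [1, 0, 1, 0]
print(le.inverse_transform([0]).tolist())  # ['Traditional food']
```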

D. Model selection, fitting and predicting.

The DecisionTreeRegressor model gave the best coefficient of determination (R²), even without any feature engineering. According to scikit-learn 0.24.1, pipelines offer the same API as a regular estimator, meaning a pipeline has methods for training and making predictions.
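
Because the pipeline behaves like a single estimator, fitting, predicting and scoring each take one call. The sketch below follows the article's choice of DecisionTreeRegressor on the label-encoded target, so score() returns R². The random data, the split and the random_state values are assumptions, and with random labels the score is meaningless (it can even be negative).

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.model_selection import train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import LabelEncoder, OneHotEncoder
from sklearn.tree import DecisionTreeRegressor

# Random stand-in data with the article's column names (not the real dataset).
rng = np.random.default_rng(42)
n = 100
X = pd.DataFrame({
    "Age": rng.integers(18, 60, n),
    "Nationality": rng.choice(["Indian", "American", "Pakistani"], n),
    "Gender": rng.choice(["Male", "Female"], n),
    "Dessert": rng.choice(["Yes", "No", "Maybe"], n),
    "Juice": rng.choice(["Fresh Juice", "Carbonated drinks"], n),
})
le = LabelEncoder()
y = le.fit_transform(rng.choice(["Traditional food", "Western Food"], n))

xcols = ["Nationality", "Gender", "Dessert", "Juice"]
model = Pipeline([
    ("preprocess", ColumnTransformer(
        [("cat", Pipeline([
            ("impute", SimpleImputer(strategy="most_frequent")),
            ("onehot", OneHotEncoder(handle_unknown="ignore")),
        ]), xcols)],
        remainder="passthrough",
    )),
    ("regressor", DecisionTreeRegressor(random_state=0)),
])

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)

model.fit(X_train, y_train)       # preprocessing is fitted on the training data only
y_pred = model.predict(X_test)    # raw strings go in; encoding happens inside
r2 = model.score(X_test, y_test)  # R² for a regressor
```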

E. Using a pandas DataFrame to create out-of-sample data.

This section and section D form the foundation of this tutorial. We expect to capture user input and pass it to our model to make predictions, so we need to create an unseen observation and pass it to the model. I have done this by building a dictionary and then converting it to a pandas DataFrame with the index parameter set to “289”, because the rows in my dataset end at “288”.

The index value itself is arbitrary (any value from zero upward will do); it does not affect the preprocessing or the prediction.
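
A sketch of building one out-of-sample observation from a dictionary, as described above. The six training rows and the pipeline are stand-ins rather than the author's data (imputation is left out for brevity), and the values in new_obs are hypothetical user choices. Predicting the same row under index 289 and under index 0 shows that the index label does not change the result.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import LabelEncoder, OneHotEncoder
from sklearn.tree import DecisionTreeRegressor

# Made-up training data with the article's column names.
train = pd.DataFrame({
    "Age": [24, 30, 22, 35, 28, 26],
    "Nationality": ["Indian", "American", "American", "Indian", "Indian", "American"],
    "Gender": ["Male", "Female", "Female", "Male", "Female", "Male"],
    "Dessert": ["Yes", "No", "Maybe", "Yes", "No", "Maybe"],
    "Juice": ["Fresh Juice", "Carbonated drinks", "Fresh Juice",
              "Carbonated drinks", "Fresh Juice", "Carbonated drinks"],
    "Food": ["Traditional food", "Western Food", "Traditional food",
             "Western Food", "Traditional food", "Western Food"],
})
xcols = ["Nationality", "Gender", "Dessert", "Juice"]

le = LabelEncoder()
y = le.fit_transform(train["Food"])

model = Pipeline([
    ("preprocess", ColumnTransformer(
        [("cat", OneHotEncoder(handle_unknown="ignore"), xcols)],
        remainder="passthrough",
    )),
    ("regressor", DecisionTreeRegressor(random_state=0)),
])
model.fit(train.drop(columns="Food"), y)

# One unseen observation, built as a dict and wrapped in a one-row DataFrame.
new_obs = {"Age": 27, "Nationality": "Indian", "Gender": "Female",
           "Dessert": "Yes", "Juice": "Fresh Juice"}
test = pd.DataFrame(new_obs, index=[289])  # scalar values need an explicit index

pred_289 = model.predict(test)
pred_0 = model.predict(pd.DataFrame(new_obs, index=[0]))

# Map the regressor's float output back to a food label.
label = le.inverse_transform(np.rint(pred_289).astype(int))[0]
```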

Step 2. Developing a Bootstrap user interface for user interaction.

Basically, the important bit here is pointing your form to the correct view function (the function under the route decorator) using the “url_for()” function. Lastly, rendering the model's output is handled by Flask's render_template function, together with Jinja's “{{ }}” delimiters placed in the HTML page where the output should appear.

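Jinja is simply the template engine Flask uses to generate HTML from Python, and it does so securely and automatically: with Flask's default settings, values inserted into HTML templates are automatically escaped.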
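
A minimal sketch of the url_for() and {{ }} pieces, assuming Flask is installed. To keep it in one file, the template is an inline string rendered with render_template_string; in the tutorial's setup it would live in an HTML file under templates/ and be rendered with render_template. The route names, the single Gender field and prediction_text are hypothetical. Flask enables autoescaping for strings rendered this way, so anything placed inside {{ }} is HTML-escaped.

```python
from flask import Flask, render_template_string, request

app = Flask(__name__)

# Inline stand-in for an HTML template file (hypothetical markup).
PAGE = """
<form action="{{ url_for('predict') }}" method="post">
  <select name="Gender">
    <option>Male</option>
    <option>Female</option>
  </select>
  <button type="submit">Predict</button>
</form>
<p>{{ prediction_text }}</p>
"""


@app.route("/")
def home():
    # url_for('predict') resolves to the URL of the predict() view below.
    return render_template_string(PAGE, prediction_text="")


@app.route("/predict", methods=["POST"])
def predict():
    gender = request.form["Gender"]
    # The value is HTML-escaped when inserted at {{ prediction_text }}.
    return render_template_string(PAGE, prediction_text=f"You selected: {gender}")
```

To try it in a browser, add `app.run(debug=True)` at the bottom of the script and open the root URL.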

Step 3. Creating a REST API using Flask to serve user requests to the model.

To put it very simply, Flask is the “engine” on which the entire vehicle is built. You can safely say that both the HTML page and the model run on Flask.

So, we use “request.form”: the request object has an attribute called form that gives access to the submitted form data using the keys (xcols), and we store the values in the “qwert” variables. Then we use these variables to form a dictionary called “damp”, convert the dictionary into a pandas DataFrame called “test”, and pass the “test” sample to the predictive model. Finally, we store the outcome of the prediction in a variable called “result”. I have written a for loop because the model returns its output as an array; once I get the float value, I run it through if statements that compare it with the encoded labels the label encoder generated from the categorical target “Food”.
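
Putting Step 3 together, here is a sketch of the prediction route. Everything in it is an assumption standing in for the author's code: the made-up training rows, the form field names (taken to match the training columns), the /predict route and the plain-text response. The dictionary-to-DataFrame flow follows the description above. Instead of a for loop with if statements, it rounds the regressor's float output to the nearest class code and lets LabelEncoder.inverse_transform recover the food name, which is one alternative to hand-written conditions.

```python
import numpy as np
import pandas as pd
from flask import Flask, request
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import LabelEncoder, OneHotEncoder
from sklearn.tree import DecisionTreeRegressor

xcols = ["Nationality", "Gender", "Dessert", "Juice"]
FEATURES = ["Age"] + xcols  # same column order as during training

# Made-up training rows (not the real dataset).
train = pd.DataFrame({
    "Age": [24, 30, 22, 35, 28, 26],
    "Nationality": ["Indian", "American", "American", "Indian", "Indian", "American"],
    "Gender": ["Male", "Female", "Female", "Male", "Female", "Male"],
    "Dessert": ["Yes", "No", "Maybe", "Yes", "No", "Maybe"],
    "Juice": ["Fresh Juice", "Carbonated drinks", "Fresh Juice",
              "Carbonated drinks", "Fresh Juice", "Carbonated drinks"],
    "Food": ["Traditional food", "Western Food", "Traditional food",
             "Western Food", "Traditional food", "Western Food"],
})

le = LabelEncoder()
model = Pipeline([
    ("preprocess", ColumnTransformer(
        [("cat", Pipeline([
            ("impute", SimpleImputer(strategy="most_frequent")),
            ("onehot", OneHotEncoder(handle_unknown="ignore")),
        ]), xcols)],
        remainder="passthrough",
    )),
    ("regressor", DecisionTreeRegressor(random_state=0)),
])
model.fit(train[FEATURES], le.fit_transform(train["Food"]))

app = Flask(__name__)


@app.route("/predict", methods=["POST"])
def predict():
    # request.form maps each form field name to the submitted string.
    damp = {col: [request.form[col]] for col in FEATURES}
    test = pd.DataFrame(damp)
    test["Age"] = test["Age"].astype(int)  # form values arrive as strings

    raw = model.predict(test)[0]  # predict() returns an array; take its single value
    code = int(np.clip(np.rint(raw), 0, len(le.classes_) - 1))
    result = le.inverse_transform([code])[0]
    return f"Predicted food: {result}"
```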

Summary.

Basically speaking, there are only two concepts that you need to wrap your head around:

1. Use pipelines to chain the preprocessing and model-building steps together. We do this because the user inputs are strings, so they need to be transformed before we can make predictions.

2. Since user inputs are out-of-sample observations, we need to create a dictionary and then convert it into a pandas DataFrame, which we pass to our model for predictions.

Thank you!
